8 ML System Design

A software architecture describes the main components of a system and how they communicate with each other. Nonfunctional requirements drive architectural decisions, which are the most expensive decisions to change later. Typical questions include:

- What are the major components in the system? What does each component do?

- Where do the components live? Monolithic vs microservices?

- How do components communicate with each other? Synchronous vs asynchronous calls?

- What API does each component publish? Who can access this API?

- etc…

8.1 Feature Encoding

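The feature encoding code used for training must be the same as the code used to encode runtime data during inference with that model. Otherwise, the model receives inputs that differ from what it was trained on (training-serving skew).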
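As a minimal sketch of this requirement, the snippet below fits encoding parameters on training data once and then calls the same `encode` function for both training and live records. The field names (`age`, `country`) and the helper names `fit_encoder` and `encode` are illustrative assumptions, not part of any particular library.

```python
import math

def fit_encoder(rows):
    """Learn encoding parameters from the training data only."""
    ages = [r["age"] for r in rows]
    mean = sum(ages) / len(ages)
    # Population standard deviation; fall back to 1.0 to avoid dividing by zero.
    std = math.sqrt(sum((a - mean) ** 2 for a in ages) / len(ages)) or 1.0
    countries = sorted({r["country"] for r in rows})
    return {"age_mean": mean, "age_std": std, "countries": countries}

def encode(row, params):
    """Encode one record. Called by BOTH the training job and the inference service."""
    age = (row["age"] - params["age_mean"]) / params["age_std"]
    # Categories unseen during training map to an all-zero one-hot vector.
    one_hot = [1.0 if row["country"] == c else 0.0 for c in params["countries"]]
    return [age] + one_hot

# Training time: fit the parameters, then encode the training set.
train_rows = [
    {"age": 20, "country": "IT"},
    {"age": 40, "country": "FR"},
]
params = fit_encoder(train_rows)
X_train = [encode(r, params) for r in train_rows]

# Inference time: reuse the stored parameters and the same function.
x_live = encode({"age": 30, "country": "DE"}, params)
```

In practice, the fitted parameters would be versioned and shipped together with the model so that the two cannot drift apart.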

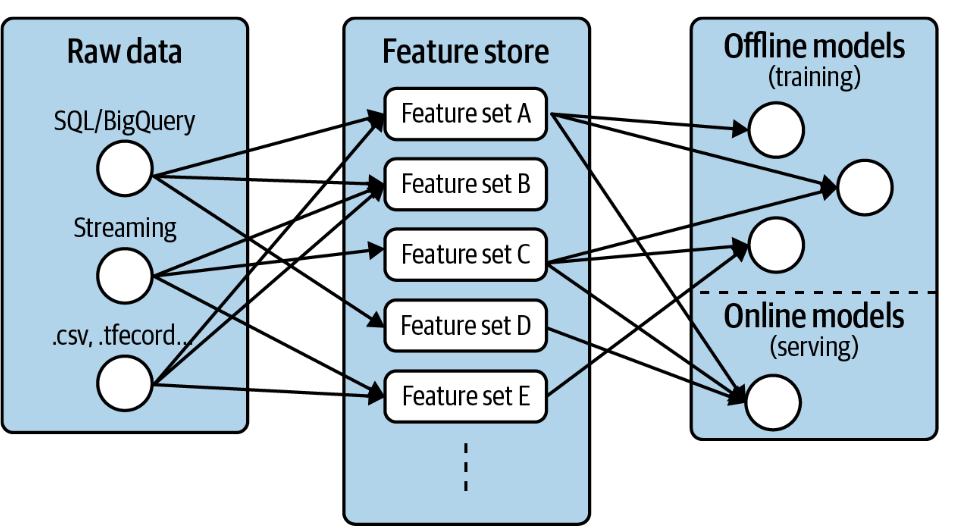

Should feature encoding happen server-side or client-side? Either way, the goal is to reuse features across models and projects by decoupling the feature creation process from the development of the models that use those features. The Feature Store pattern addresses this: features are computed once, stored centrally, and served to any model that needs them.
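The idea behind the Feature Store pattern can be sketched with a toy in-memory store, assuming features are keyed by an entity id and a feature name. The class `FeatureStore`, its methods, and the feature names are hypothetical; production feature stores add versioning, point-in-time correctness, and separate online/offline storage.

```python
class FeatureStore:
    """Toy in-memory feature store: features are written once and read by many models."""

    def __init__(self):
        self._features = {}  # (entity_id, feature_name) -> value

    def put(self, entity_id, name, value):
        """Called by the feature pipeline, independently of any model."""
        self._features[(entity_id, name)] = value

    def get_vector(self, entity_id, names):
        """Called by a model, which picks the features it needs by name."""
        return [self._features[(entity_id, n)] for n in names]

# A feature pipeline populates the store.
store = FeatureStore()
store.put("user_42", "avg_basket_eur", 37.5)
store.put("user_42", "orders_last_30d", 4)
store.put("user_42", "days_since_signup", 210)

# Two different models reuse overlapping features without recomputing them.
churn_features = store.get_vector("user_42", ["orders_last_30d", "days_since_signup"])
recsys_features = store.get_vector("user_42", ["avg_basket_eur", "orders_last_30d"])
```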

8.2 Inference

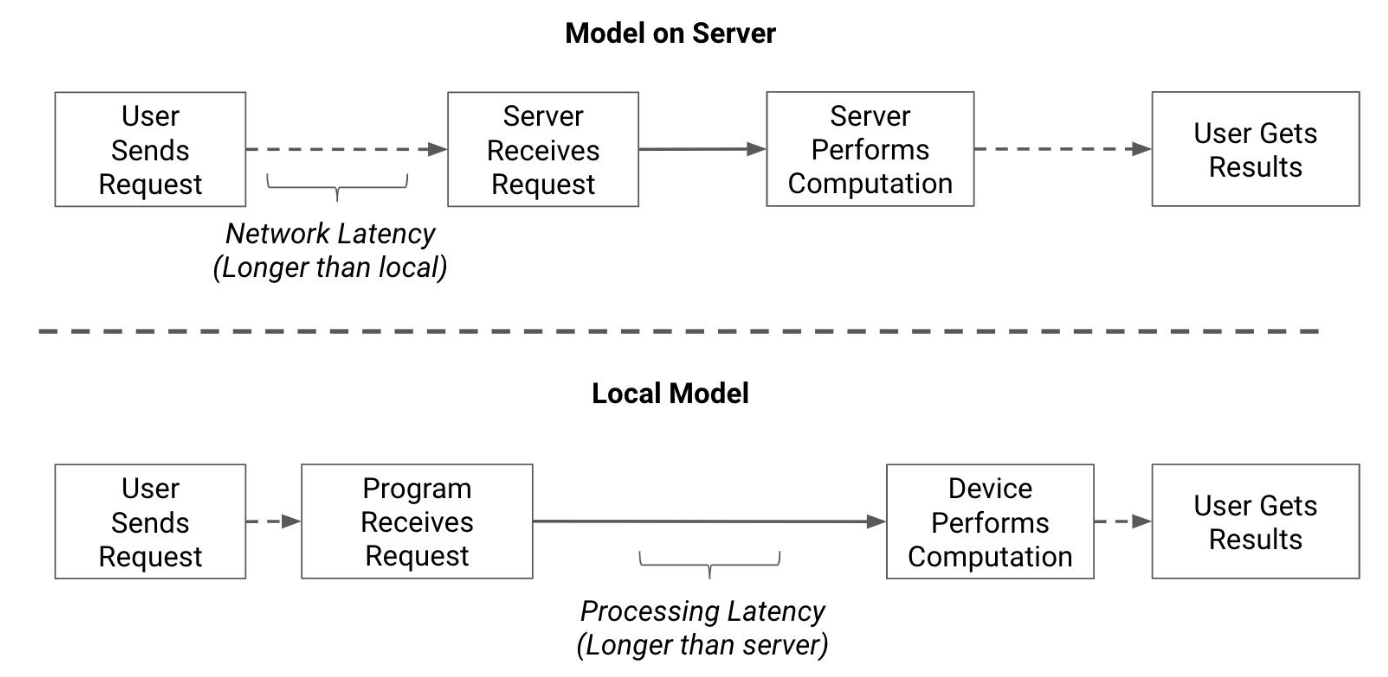

Where does the ML inference happen? Client-side or server-side?

In server-side inference, we have three options:

- Real-time inference: the server responds to client requests synchronously, as they arrive

  - latency is the primary performance measure

  - suitable for scenarios where immediate predictions are required

  - e.g., image recognition in autonomous vehicles

- Stream inference: predictions are computed asynchronously on incremental data, shortly after events make that data available

  - suitable for scenarios where data arrives continuously and predictions need to be made in near real-time

  - higher latency than synchronous services, but lower than equivalent batch systems

  - typically built on a publish/subscribe model, e.g., Apache Kafka

- Batch inference: prediction jobs run asynchronously on large but fixed amounts of data

  - suitable for scenarios where real-time predictions are not required

  - typically built on Big Data frameworks, e.g., Apache Spark, Hadoop MapReduce
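The three server-side options differ mainly in how the same model is invoked. The sketch below illustrates this with a stand-in `predict` function and a `deque` playing the role of a message queue; all names are hypothetical, and a real deployment would use a web framework, a message broker, and a distributed job runner respectively.

```python
from collections import deque

def predict(x):
    """Stand-in model (hypothetical): flags values above a fixed threshold."""
    return 1 if x > 0.5 else 0

# Real-time: one request in, one prediction out, and the caller waits for it.
def handle_request(x):
    return predict(x)

# Stream: producers enqueue events; a consumer processes them shortly afterwards.
def consume(events):
    results = []
    while events:
        results.append(predict(events.popleft()))
    return results

# Batch: a scheduled job scores a large but fixed dataset in one pass.
def run_batch_job(dataset):
    return [predict(x) for x in dataset]

realtime_result = handle_request(0.9)

events = deque([0.2, 0.7])  # stands in for a topic on a message broker
stream_results = consume(events)

batch_results = run_batch_job([0.1, 0.6, 0.4, 0.8])
```

The model code is identical in all three cases; what changes is who triggers the call and how long the caller is willing to wait for the result.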

In client-side inference, models are still trained on a server and then deployed to the device for inference. The packaged model is delivered as a binary inside a library, or runs in the browser using JavaScript.

In federated learning, each user receives a personalized model while still benefiting from information about other users, which is anonymized and aggregated to preserve privacy. For example, in the medical domain we can train models on patient data without sharing the data itself.
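The aggregation step can be illustrated with federated averaging (FedAvg), a common baseline in which clients train locally and the server averages their weights. This is a sketch under simplifying assumptions: a one-parameter linear model, one gradient step per round, and no personalization or anonymization layer. The function names and the toy data are hypothetical.

```python
def local_update(weights, data, lr=0.1):
    """One gradient step of least squares for y = w * x on a client's private data."""
    w = weights[0]
    grad = sum(2 * (w * x - y) * x for x, y in data) / len(data)
    return [w - lr * grad]

def federated_average(client_weights, client_sizes):
    """Server side: average client weights, weighted by local dataset size."""
    total = sum(client_sizes)
    dim = len(client_weights[0])
    return [
        sum(w[i] * n for w, n in zip(client_weights, client_sizes)) / total
        for i in range(dim)
    ]

# Private datasets stay on each client; both follow y = 2x.
clients = [
    [(1.0, 2.0), (2.0, 4.0)],  # client A
    [(1.0, 2.0)],              # client B
]

global_weights = [0.0]
for _ in range(50):
    # Each client trains locally and sends back only its updated weights.
    updates = [local_update(global_weights, data) for data in clients]
    global_weights = federated_average(updates, [len(d) for d in clients])
```

Only model weights cross the network; the raw `(x, y)` pairs never leave the client that owns them.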

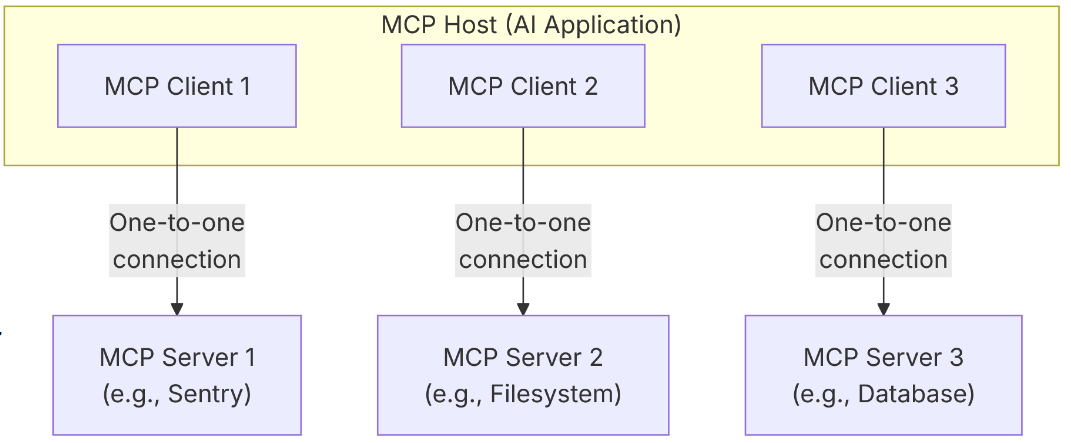

MCP (Model Context Protocol) is an open-source standard for connecting AI applications to external systems. Developers can either expose their data through MCP servers or build AI applications (MCP clients) that connect to these servers. An AI application (MCP host) creates one MCP client for each MCP server, and each MCP client maintains a dedicated one-to-one connection with its corresponding MCP server.
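The host/client/server topology described above can be sketched as plain Python objects. This only illustrates the one-client-per-server relationship; it does not use the real MCP SDK or wire protocol, and the class names, method names, and example tools are hypothetical.

```python
class MCPServer:
    """Hypothetical stand-in for an MCP server exposing named tools."""

    def __init__(self, name, tools):
        self.name = name
        self.tools = tools  # tool name -> callable

    def call_tool(self, tool, **kwargs):
        return self.tools[tool](**kwargs)

class MCPClient:
    """Holds a dedicated one-to-one connection to exactly one server."""

    def __init__(self, server):
        self.server = server

    def call_tool(self, tool, **kwargs):
        return self.server.call_tool(tool, **kwargs)

class MCPHost:
    """The AI application: creates one client per server it connects to."""

    def __init__(self):
        self.clients = {}  # server name -> MCPClient

    def connect(self, server):
        self.clients[server.name] = MCPClient(server)

    def call_tool(self, server_name, tool, **kwargs):
        return self.clients[server_name].call_tool(tool, **kwargs)

files = MCPServer("files", {"read": lambda path: f"contents of {path}"})
calc = MCPServer("calc", {"add": lambda a, b: a + b})

host = MCPHost()
host.connect(files)
host.connect(calc)

total = host.call_tool("calc", "add", a=2, b=3)
text = host.call_tool("files", "read", path="notes.txt")
```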