2 Requirements Engineering

Requirements engineering consists of understanding, specifying and managing requirements in order to minimize the risk of delivering a system that does not meet the stakeholders’ desires and needs.

The result is a collection of functional and non-functional requirements that drive the design and implementation of the system:

- Functional requirements: describe the functionality of the system to be developed

- Non-functional requirements: define desired qualities of the system to be developed (strong influence on architecture)

How do we define requirements for ML systems?

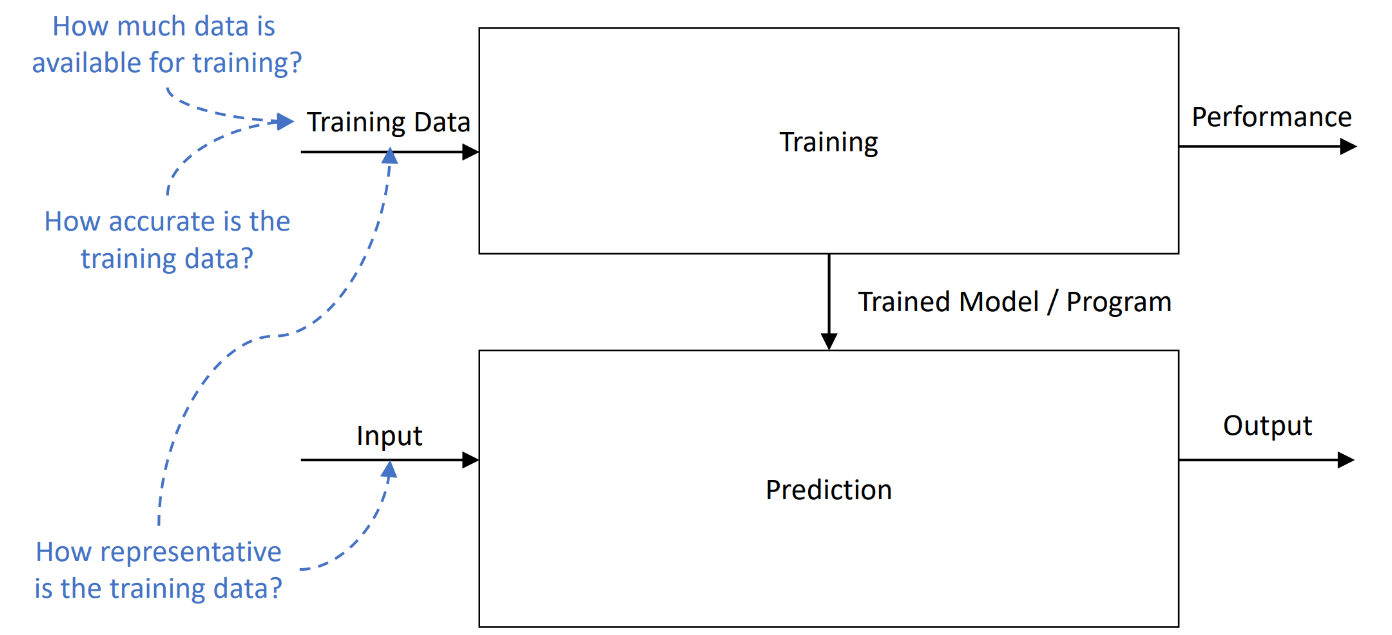

- Data requirements

    - Data quantity

    - Data quality
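Data quantity and quality requirements are easier to enforce when they are written as checks that can run against a dataset. The sketch below assumes the data is a list of records (dicts) and uses hypothetical thresholds (`min_rows`, `max_missing_ratio`, `max_duplicate_ratio`); in a real project these values would be agreed with stakeholders, and more properties (label noise, provenance, distribution shift) would usually be checked.

```python
from dataclasses import dataclass
from typing import Any, Dict, List


@dataclass(frozen=True)
class DataRequirements:
    # Hypothetical thresholds, chosen only for illustration.
    min_rows: int = 1000               # data quantity
    max_missing_ratio: float = 0.05    # data quality: share of empty cells
    max_duplicate_ratio: float = 0.01  # data quality: share of repeated records


def check_data(rows: List[Dict[str, Any]], req: DataRequirements) -> Dict[str, bool]:
    """Report which data requirements a list of records satisfies."""
    n = len(rows)
    columns = {key for row in rows for key in row}
    total_cells = n * len(columns)
    # A cell is missing when the key is absent from a record or its value is None.
    missing = sum(1 for row in rows for col in columns if row.get(col) is None)
    missing_ratio = missing / total_cells if total_cells else 1.0
    # Exact duplicates: records with identical (key, value) pairs (values must be hashable).
    unique = {tuple(sorted(row.items())) for row in rows}
    duplicate_ratio = (n - len(unique)) / n if n else 0.0
    return {
        "quantity": n >= req.min_rows,
        "missing": missing_ratio <= req.max_missing_ratio,
        "duplicates": duplicate_ratio <= req.max_duplicate_ratio,
    }
```

For three records with one empty `age` cell and one repeated record, the missing ratio is 1/6 and the duplicate ratio is 1/3, so the default thresholds reject the dataset on all three checks.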

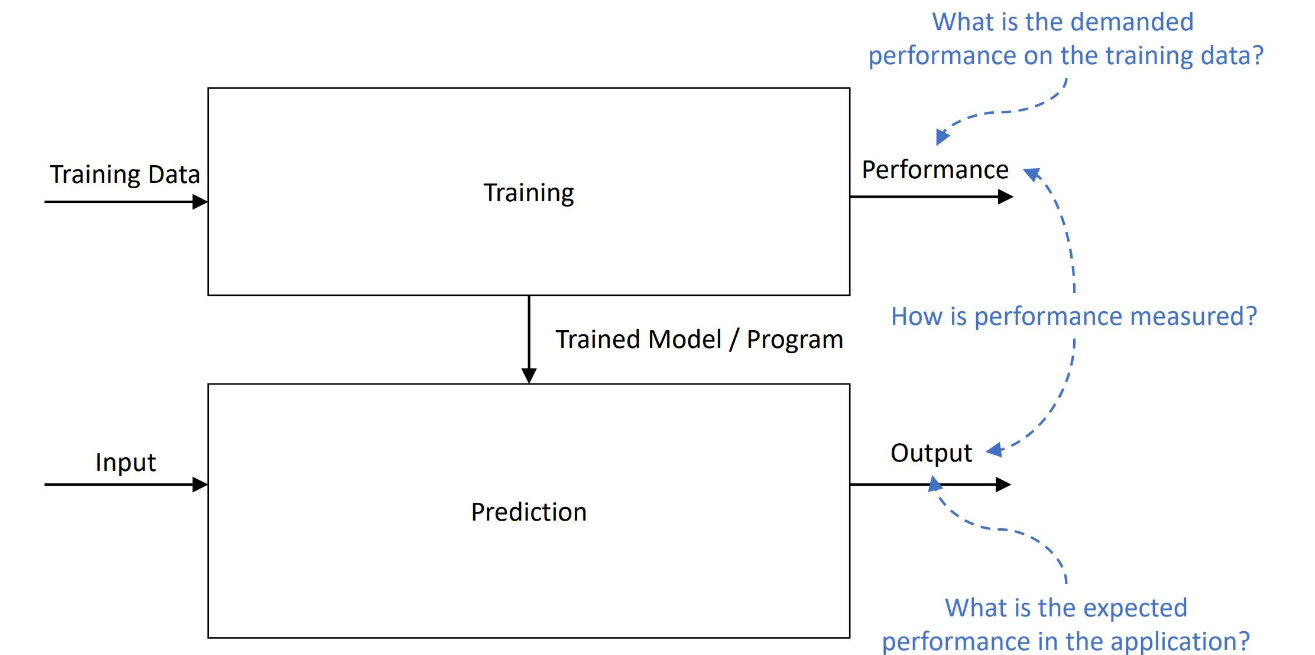

- Quantitative targets (a.k.a. functional requirements)

    - Model performance

- Non-functional (quality) requirements

How is performance measured? With metrics such as accuracy, precision, recall, F1-score and ROC-AUC.
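The threshold-based metrics can all be derived from the four confusion-matrix counts. A minimal sketch for binary labels (1 = positive class); the function name is hypothetical, reporting 0.0 for a zero denominator is a convention chosen here, and ROC-AUC is left out because it needs scores rather than hard predictions. In practice, libraries such as scikit-learn's `sklearn.metrics` module provide these metrics.

```python
from typing import Dict, Sequence


def classification_metrics(y_true: Sequence[int], y_pred: Sequence[int]) -> Dict[str, float]:
    """Accuracy, precision, recall and F1 for binary labels (1 = positive class)."""
    pairs = list(zip(y_true, y_pred))
    if not pairs:
        raise ValueError("need at least one example")
    tp = sum(1 for t, p in pairs if t == 1 and p == 1)  # true positives
    fp = sum(1 for t, p in pairs if t == 0 and p == 1)  # false positives
    fn = sum(1 for t, p in pairs if t == 1 and p == 0)  # false negatives
    tn = sum(1 for t, p in pairs if t == 0 and p == 0)  # true negatives
    accuracy = (tp + tn) / len(pairs)
    # Convention used here: a metric with a zero denominator is reported as 0.0.
    precision = tp / (tp + fp) if tp + fp else 0.0
    recall = tp / (tp + fn) if tp + fn else 0.0
    f1 = 2 * precision * recall / (precision + recall) if precision + recall else 0.0
    return {"accuracy": accuracy, "precision": precision, "recall": recall, "f1": f1}
```

On imbalanced data, accuracy alone can be misleading: a model that always predicts "negative" on ten examples with one positive reaches 0.9 accuracy while its recall and F1 are 0.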

Performance requirements for ML systems demand a rigorous analysis of the problem to be solved: we need to find the right balance between precision and recall for the application domain. A spam filter needs high precision, to avoid false positives (legitimate emails marked as spam); a cancer detection system needs high recall, to avoid false negatives (cancers that go undetected).
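In practice this balance is often set by choosing the decision threshold applied to the model's scores. A minimal sketch, assuming the model outputs one score per example and predicts "positive" when score >= threshold; the function names are hypothetical. For a cancer-detection-style requirement it returns the highest threshold that still reaches a minimum recall; for a spam-filter-style requirement it returns the lowest threshold that reaches a minimum precision, which keeps as much recall as possible under that constraint.

```python
from typing import Optional, Sequence, Tuple


def _counts(scores: Sequence[float], labels: Sequence[int], threshold: float) -> Tuple[int, int]:
    """Return (true positives, predicted positives) at the given threshold."""
    tp = sum(1 for s, y in zip(scores, labels) if s >= threshold and y == 1)
    predicted = sum(1 for s in scores if s >= threshold)
    return tp, predicted


def threshold_for_min_recall(scores: Sequence[float], labels: Sequence[int], min_recall: float) -> float:
    """Highest threshold whose recall is at least min_recall."""
    if not 0.0 <= min_recall <= 1.0:
        raise ValueError("min_recall must be in [0, 1]")
    positives = sum(labels)
    if positives == 0:
        raise ValueError("need at least one positive example")
    # Recall can only grow as the threshold drops, so the first hit scanning
    # downward is the highest threshold that satisfies the requirement.
    for threshold in sorted(set(scores), reverse=True):
        tp, _ = _counts(scores, labels, threshold)
        if tp / positives >= min_recall:
            return threshold
    raise AssertionError("unreachable: the lowest threshold has recall 1")


def threshold_for_min_precision(scores: Sequence[float], labels: Sequence[int], min_precision: float) -> Optional[float]:
    """Lowest threshold whose precision is at least min_precision, or None."""
    # Precision is not monotonic in the threshold, so every candidate is checked;
    # the lowest feasible one maximizes recall among feasible thresholds.
    for threshold in sorted(set(scores)):
        tp, predicted = _counts(scores, labels, threshold)
        if predicted and tp / predicted >= min_precision:
            return threshold
    return None
```

With scores `[0.95, 0.9, 0.8, 0.7, 0.6, 0.4, 0.3, 0.2]` and labels `[1, 1, 0, 1, 0, 1, 0, 0]`, requiring recall 1.0 gives a threshold of 0.4, while requiring precision 1.0 gives 0.9: the two domains push the same model toward opposite ends of the trade-off.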

As for non-functional requirements, ML systems introduce new types of qualities:

- Fairness (avoid bias)

- Explainability: the ability to indicate the reasons why an application made a decision. An algorithm can be interpretable by design (decision trees, logistic regression), or it may require post hoc explainability methods (e.g., feature attribution)

- Data privacy regulations: how do laws and regulations such as the GDPR constrain the data a system collects and processes? Under the GDPR, individuals have the right to:

    - Be informed about data collected or processed

    - Access collected data and understand its processing

    - Correct inaccurate data

    - Be forgotten (i.e., have their data removed)

    - Restrict processing of personal data

    - Obtain collected data and reuse it elsewhere

    - Object to automated decision-making
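A fairness requirement needs a measurable definition before it can be checked. One common (but not the only) choice is demographic parity: the rate of positive predictions should be similar across groups defined by a sensitive attribute. A minimal sketch computing the demographic parity difference; the function names and group labels are hypothetical, and other definitions (e.g., equalized odds) can conflict with this one, so choosing the metric is itself a requirements decision.

```python
from typing import Dict, Hashable, Sequence


def positive_rate_by_group(y_pred: Sequence[int], groups: Sequence[Hashable]) -> Dict[Hashable, float]:
    """Share of positive predictions (label 1) within each group."""
    totals: Dict[Hashable, int] = {}
    positives: Dict[Hashable, int] = {}
    for pred, group in zip(y_pred, groups):
        totals[group] = totals.get(group, 0) + 1
        positives[group] = positives.get(group, 0) + (1 if pred == 1 else 0)
    return {group: positives[group] / totals[group] for group in totals}


def demographic_parity_difference(y_pred: Sequence[int], groups: Sequence[Hashable]) -> float:
    """Largest gap in positive-prediction rate between any two groups (0.0 means parity)."""
    rates = positive_rate_by_group(y_pred, groups)
    if not rates:
        raise ValueError("no predictions to evaluate")
    return max(rates.values()) - min(rates.values())
```

If group "A" receives positive predictions 75% of the time and group "B" only 25%, the difference is 0.5; a requirement might then state a maximum acceptable gap.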

2.1 Model Requirements Checklist

- Set minimum accuracy expectations

- Identify runtime needs at inference time for the model

    - Latency, inference throughput, cost of operation

- Identify evolution needs for the model

    - Frequency of model updates, latency of those updates, cost of training and experimentation, ability to learn incrementally

- Identify explainability needs for the model in the system

- Identify safety and fairness concerns in the system

- Identify how security and privacy concerns in the system relate to the model

    - Including both legal and ethical concerns

- Understand what data is available

    - Quantity, quality, formats, provenance
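The measurable items of this checklist can be written down as explicit acceptance criteria. A minimal sketch, assuming hypothetical thresholds for accuracy, 95th-percentile latency and throughput; the field names and the nearest-rank percentile are illustrative choices, not a standard. Evolution, explainability, fairness and privacy needs usually call for reviews or dedicated tests rather than a single number.

```python
import math
from dataclasses import dataclass
from typing import Dict, Sequence


@dataclass(frozen=True)
class ModelRequirements:
    """Hypothetical, machine-checkable subset of the model requirements checklist."""
    min_accuracy: float        # minimum accuracy expectation
    max_p95_latency_ms: float  # runtime need: 95th-percentile latency per request
    min_throughput_rps: float  # runtime need: requests served per second


def percentile(values: Sequence[float], q: float) -> float:
    """Nearest-rank percentile, q in (0, 100]."""
    if not values:
        raise ValueError("no measurements")
    ordered = sorted(values)
    rank = math.ceil(q * len(ordered) / 100)
    return ordered[max(rank, 1) - 1]


def check_requirements(req: ModelRequirements, accuracy: float, latencies_ms: Sequence[float],
                       requests_served: int, window_s: float) -> Dict[str, bool]:
    """Compare offline accuracy and load-test measurements against the requirements."""
    p95 = percentile(latencies_ms, 95)
    throughput = requests_served / window_s
    return {
        "accuracy": accuracy >= req.min_accuracy,
        "latency": p95 <= req.max_p95_latency_ms,
        "throughput": throughput >= req.min_throughput_rps,
    }
```

A single slow request among ten (50 ms versus about 10 to 15 ms) is enough to push the 95th percentile to 50 ms, which is why latency requirements are usually stated as percentiles rather than averages.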

The ML Canvas is a useful tool for capturing and documenting all the requirements of an AI-enabled system.

2.2 EU Guidelines for Trustworthy AI

Trustworthy AI should be:

- lawful: respecting all applicable laws and regulations

- ethical: respecting ethical principles and values

- robust: both from a technical perspective and with respect to its social environment

Trustworthy AI should meet seven key requirements:

- Human agency and oversight

- Technical robustness and safety

- Privacy and data governance

- Transparency

- Diversity, non-discrimination and fairness

- Societal and environmental wellbeing

- Accountability

The AI Act defines different levels of risk for AI systems:

- Minimal risk: applications such as spam filters or video games

- Limited risk: applications subject to transparency obligations, such as chatbots

    - They must inform users that they are interacting with an AI system

- High risk: applications that have a significant impact on people’s lives, such as AI systems in medical devices

    - They must meet strict obligations before they can be placed on the market

- Unacceptable risk: applications that are considered a threat to the safety, livelihoods and rights of people, such as social scoring

    - Completely banned