8 Expert Systems: An Overview

8.1 Introduction to Knowledge-Based and Expert Systems

8.1.1 Knowledge-Based Systems

A Knowledge-Based System (KBS) is a system designed to solve problems within a specific, limited domain, aiming for a level of performance comparable to that of a human expert in that same domain.

A program within this framework serves as an environment for representing, utilizing, and modifying a knowledge base. Key properties of such a program include:

- Generality: Capable of solving any problem within its defined domain.

- Explicit Knowledge Representation: Allows for the provision of explanations for its reasoning.

- Reasoning Mechanisms: Employs defined logic to process information.

- Explainability: Can articulate the rationale behind its decisions.

- Adaptability: Able to operate effectively in ill-structured domains.

8.1.2 Expert Systems

An Expert System (ES) is a specialized type of KBS that emulates the behavior of a human expert for a particular problem. It leverages a knowledge base to perform tasks as an expert would, complete with the ability to explain its underlying reasoning processes.

8.1.3 The Knowledge Base

A Knowledge Base (KB) is a formal representation of an expert’s knowledge, capturing both their understanding of the domain and their methods of reasoning.

8.1.4 Purpose and Applications

Common applications include:

- Analysis: E.g., classification of data or situations.

- Synthesis: Deducing new knowledge from available information.

- Simulation: Exploring potential future scenarios and answering “what if” questions.

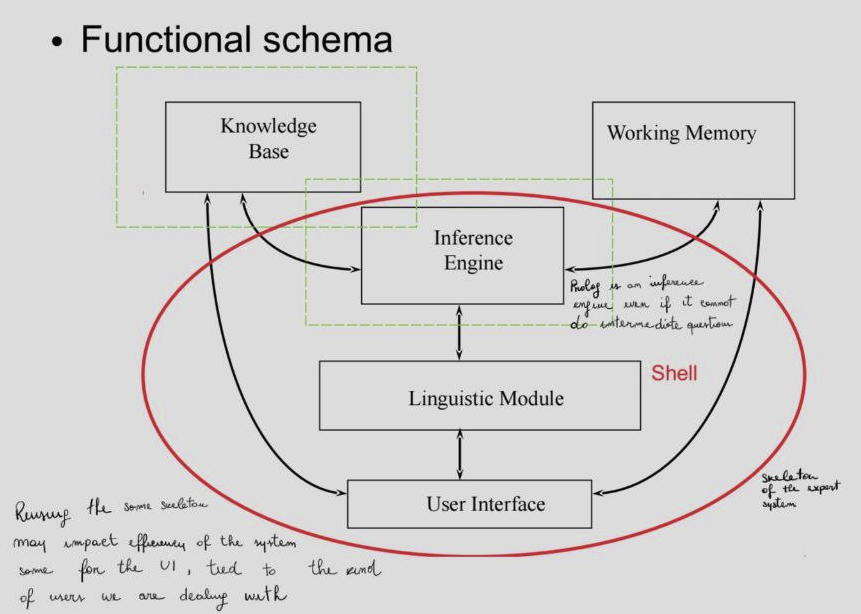

Figure 1: Functional schema of an expert system. The general domain knowledge in the knowledge base is akin to long-term memory, often comprising “consequent IF antecedent” rules. The working memory holds case-specific knowledge, similar to short-term memory.

Figure 1: Functional schema of an expert system. The general domain knowledge in the knowledge base is akin to long-term memory, often comprising “consequent IF antecedent” rules. The working memory holds case-specific knowledge, similar to short-term memory.

8.2 Anatomy of an Expert System

An expert system typically comprises the following components:

- User Interface: Enables users to interact with the system using language appropriate to the application domain, usually in an interactive manner.

- Linguistic Module: Facilitates explanations of the system’s reasoning, clarifying:

- How: The reasoning path taken to reach a conclusion.

- Why: The relevance of specific information required for the reasoning process.

- Inference Engine: Matches facts stored in the working memory with rules and facts in the knowledge base to derive satisfactory or optimal solutions.

- Knowledge Base: Contains the general, long-term knowledge of the domain.

- Working Memory: Stores specific knowledge and facts about the current case under consideration.

8.2.1 Comparison to Human Experts

| Human Expert | Expert System |

|---|---|

| Short-term memory | Working memory |

| Long-term memory | Knowledge base |

| Reasoning | Inference Engine |

| Advised person | User |

8.2.2 Comparison to Traditional Systems

| Traditional Program | Expert System |

|---|---|

| Numeric processing | Symbolic processing |

| Algorithms | Heuristics |

| Integrated information and control | Knowledge separated from control |

| Difficult to modify | Easy to modify |

| Precise information | Can handle uncertain information |

| Command-based interface | Natural dialogue with explanations |

| Final result | Recommendation with explanations |

| Optimal solution | Acceptable solution (often heuristic) |

8.3 Knowledge in Expert Systems

8.3.1 Types of Knowledge

Knowledge within an expert system can be categorized as:

- Public: Widely available and generally accepted knowledge.

- Private: Knowledge specific to an individual expert or a small group.

- Procedural: Knowledge about how to solve a problem (e.g., steps, procedures).

- Declarative: Knowledge about what is known about a problem (e.g., facts, concepts).

- Meta-knowledge: Knowledge about knowledge itself (e.g., reliability of sources, applicability of rules).

- Heuristic: “Shallow” knowledge based on experience, rules of thumb, or educated guesses.

- Structural: Organized knowledge that defines relationships between concepts or entities.

8.4 Reasoning in Expert Systems

The inference engine is central to an expert system’s reasoning capabilities. It employs search strategies to navigate the knowledge base and derive conclusions.

8.4.1 Search Strategies

Two primary search strategies are commonly used:

- Backward Chaining (Goal-Driven, characteristic of Prolog):

- Start from the desired goal (conclusion).

- Apply rules in reverse (from consequent to antecedent).

- Continue until the initial facts describing the problem are found in the working memory.

- Forward Chaining (Data-Driven):

- Place the initial facts describing the problem into the working memory.

- Search for rules whose antecedents (IF parts) are satisfied by the facts in the working memory.

- Choose one such rule and apply its consequent (THEN part), which may add or remove facts from the working memory.

- Terminate when the goal appears in the working memory or no more rules can be applied.

8.5 Building Expert Systems

8.5.1 Development Tools (Shells)

Expert system development often utilizes specialized tools known as “shells”:

- Skeleton Shells: Consist of an inference engine and user interface taken from an existing expert system, but stripped of its domain-specific knowledge. They offer a pre-built framework for a new application.

- Environment Shells: General-purpose development environments that provide tools to create both the interface and the reasoning mechanisms. They typically offer a language for knowledge representation, greater adaptability, and openness to the underlying system. Environments are more flexible but also more complex to use than skeletons.

8.5.2 Development Phases

The construction of an expert system generally follows these phases:

- Definition: Clearly defining the domain of interest and the scope of the problem the ES will address.

- Knowledge Acquisition: Gathering knowledge from human experts. Common methods include:

- Thinking Aloud: Experts verbalize their thought process while solving a problem.

- Interviews: Structured or unstructured discussions with experts.

- Cross-Examination: The knowledge engineer and expert collaboratively discuss and refine topics.

- Design: This phase often follows knowledge acquisition, as the type of inference mechanism is tailored to the acquired knowledge. It involves designing the knowledge representation, inference strategies, and system architecture.

- Testing: Validating the system’s performance, accuracy, and reliability.

- Documentation: Recording the system’s design, functionality, and knowledge base.

- Maintenance: Updating and refining the system as new knowledge becomes available or the domain evolves.

8.6 Using Prolog for Building Expert Systems

Prolog is a powerful programming language that can serve as an “Environment” type development tool for expert systems. It inherently offers a backward chaining reasoning strategy, provides final answers to queries, and operates within a strictly true/false logical framework.

8.6.1 Development Phases in Prolog

When using Prolog, development involves:

A. Knowledge Representation:

- Choosing and defining a suitable formalism for representing domain knowledge.

- Designing an inference engine to exploit this formalism (Prolog’s engine can be extended).

- Adding tools for user interaction.

- Incorporating tools to handle specialized features, such as uncertainty.

B. Design:

- Defining the intended behavior and reasoning process of the expert system.

- Enabling the system to answer “why” questions (explaining the need for certain information).

- Enabling the system to answer “how” questions (explaining the derivation of a conclusion).

8.6.2 Enhanced Backward Reasoning Process in Prolog-based ES

To find an answer (R) to a question (D), an expert system built in Prolog might use an enhanced version of its native backward chainer, applying principles in a specific order:

- Fact Check: If D is available as a fact in the KB, then R = “D is true”.

- Rule Application: If a rule “IF condition THEN D” exists in the KB, check the

condition. The result of this check is used to build R. - Askable Predicates: If D is designated as “askable” (i.e., the system can query the user for it), then ask the user about D.

- Conjunction (D = “D1 and D2”):

- Explore D1.

- If D1 is false, then R = “D is false”.

- Otherwise, explore D2 and combine the answers for D1 and D2 to form R.

- Disjunction (D = “D1 or D2”):

- Explore D1.

- If D1 is true, then R = “D is true”.

- Otherwise, explore D2. Combine answers for D1 and D2 to form R. (Note: Both D1 and D2 might be explored even if D1 is true, to gather more evidence or for comprehensive explanations).

- Negation (D = “not D1”):

- Apply the system’s adopted interpretation for negation (e.g., negation as failure).

Key Differences from Standard Prolog:

- Order of Operations: Typically checks facts before attempting to apply rules.

- Meta-knowledge: Can support knowledge about the knowledge itself.

- Askable Predicates: Allows direct user queries for specific pieces of information.

The system can request information from the user, and conversely, the user can ask for explanations (“why” and “how”).

8.6.3 Key System Procedures in a Prolog ES

Common procedures in a Prolog-based expert system include:

expert: The main procedure that:- Reads the user’s question.

- Initiates the reasoning process.

- Asks the user if they want to see more possible answers, if any exist.

explore(Goal, Trace, Answer):- Purpose: Exploits the knowledge base to find an answer to the

Goal. Goal: The question or claim to be verified, potentially a combination of conjunctions and/or disjunctions of simple claims.Trace: The chain of goals and rules linking the currentGoalto the initial user question. This enables “how” explanations.Answer: The result for the currentGoal, often structured as a proof tree for “how” explanations.

- Purpose: Exploits the knowledge base to find an answer to the

useranswer(Goal, Trace, Answer):- Purpose: An alternative to

explorewhen the KB lacks sufficient information to answer theGoal. It directly asks the user if theGoalis askable. Trace: Still needed because the user might ask “why” the information is being requested.

- Purpose: An alternative to

present(Answer):- Purpose: Shows the user the

Answerto their initial question. If requested, it uses theTraceto explain how the system reached that conclusion.

- Purpose: Shows the user the

8.6.4 Dealing with Uncertainty in Prolog

Standard Prolog operates on a strict true/false basis. To handle the inherent uncertainty in many expert domains, mechanisms must be added.

Approaches to Uncertainty:

- Strict (Probabilistic): Using formal probability theory.

- Informal (Confidence-based): Using concepts like confidence factors, similar to human reasoning or fuzzy logic.

Implementation: Uncertainty can be associated directly with knowledge items:

- Facts can have an additional argument for their confidence.

- Rules can use a reserved predicate to specify their confidence. Example:

h(X) :- p(X, Y), q(Y).becomesh(X) :- p(X, Y), q(Y), prob(0.8).(indicating the rule has a confidence of 0.8).

Fuzzy Logic Semantics for Confidence (c):

- Facts (P1, P2):

c(P1 and P2) = min(c(P1), c(P2))(Confidence of conjunction is limited by the weakest link).c(P1 or P2) = max(c(P1), c(P2))(Confidence of disjunction is determined by the strongest link).c(not P1) = 1 - c(P1)(Confidence of negation is the complement).

- Rules (R: if P1 then P2):

c(P2) = c(P1) * c(R)(Confidence of the consequent is the product of the antecedent’s confidence and the rule’s confidence).

Alternative: N/S Factors (Necessity and Sufficiency) Another method involves modeling how evidence (E) affects the expectation of a hypothesis (H) using two parameters:

- N (Necessary Factor): If E is false, a lower N makes H less probable.

- S (Sufficient Factor): If E is true, a higher S makes H more probable.

8.6.5 Control Strategy in Prolog Expert Systems

The control strategy dictates the order in which rules are applied. While Prolog has a default backward chaining strategy, it can be customized or other strategies can be implemented.

Implementing Forward Chaining: A pure forward chaining strategy can be implemented by:

- Representing rules and facts using meta-predicates:

fact(p(a,b)).for a factp(a,b).rule(Head, BodyList).for a ruleHead :- Body., whereBodyListis a list of literals in the body. Example:g(X) :- p(X, Y), not(f(Y)).becomesrule(g(X), [p(X, Y), not(f(Y))]).

- Looping through facts:

- Read a fact.

- For all rules where this fact appears in the body, remove it from their

BodyList. - If a rule’s

BodyListbecomes empty, itsHeadbecomes a new fact. - The original rule is replaced (either with the new fact or the modified rule).

- Managing used facts:

- A fact, once used to trigger rules, should not be reconsidered in the same iteration. It can be removed and reasserted using a meta-predicate like

usedfact/1to indicate it has been processed but remains valid knowledge. - Ultimately, all conclusions derived will be represented as

fact/1orusedfact/1.

- A fact, once used to trigger rules, should not be reconsidered in the same iteration. It can be removed and reasserted using a meta-predicate like

Efficiency Considerations:

- Indexing: Prolog automatically indexes clauses based on their head predicate and arity. For specific needs, custom indexing can be implemented.

- A predicate like

index(Predicate, ListOfRules)can create a list of rules relevant toPredicate, allowing quicker access. The index could be a list of pairs(Predicate, [Rules]).

- A predicate like

Customizing Rule Order:

- Prolog typically tries rules in the order they appear in the source code. This can be altered using a meta-predicate like

prefer(Head1, Body1, Head2, Body2). This predicate compares two candidate rules (defined by their head and body) and implements an evaluation function to choose the preferred rule for a given situation.

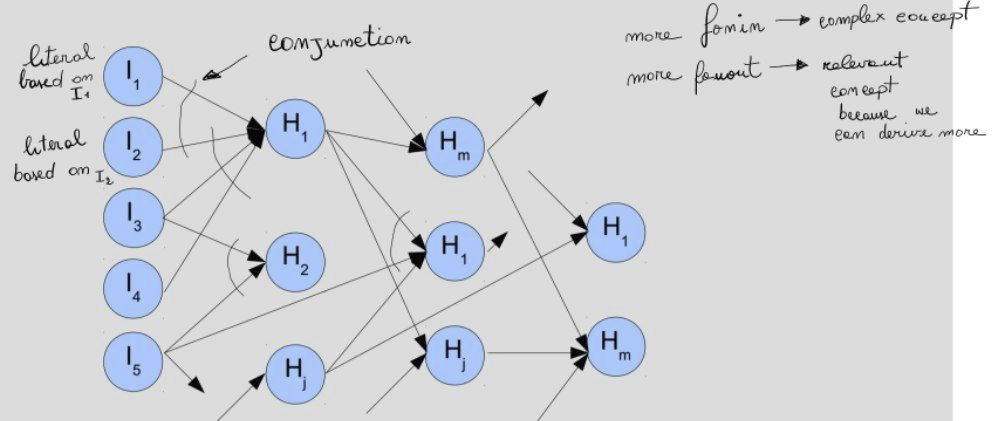

8.6.6 Interpretable Knowledge Representation: AND-OR Graphs

An AND-OR graph is a directed acyclic graph (DAG) that offers an intuitive way to represent knowledge, especially rule structures:

- Nodes: Represent concepts or predicates.

- Arcs: Represent preconditions.

- AND-arcs: Indicate that all connected sub-goals/conditions must be true.

- OR-arcs: Indicate that at least one of the connected sub-goals/conditions must be true.

Figure 2: Example of an AND-OR graph.

Figure 2: Example of an AND-OR graph.